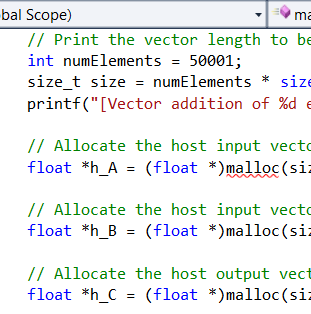

The NVIDIA CUDA sample simpleCallback shows how to use the CUDA runtime function cudaStreamAddCallback to have a host callback invoked once CUDA has finished all the work that was queued in the stream before the callback was added. This can be used to launch kernels asynchronously and be notified of their completion, or to provide status updates on processing.

The key function in the example is

    checkCudaErrors(cudaStreamAddCallback(workload->stream, myStreamCallback, workload, 0));

This call enqueues the callback in the stream. The example creates several workers that execute asynchronously, but instead of calling cudaDeviceSynchronize to block the CPU until all the kernels complete, it sets up a more subtle barrier synchronization and waits on that.
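To make the barrier idea concrete, here is a minimal host-only sketch of a counting barrier built from a mutex and a condition variable. It is not the code from the sample: the names HostBarrier and runWorkloads are hypothetical, and each std::thread merely stands in for the work that a stream callback would trigger, so the sketch runs without a GPU.

```cpp
#include <condition_variable>
#include <mutex>
#include <thread>
#include <vector>

// Counting barrier: wait() returns once `expected` arrivals have been signalled.
class HostBarrier {
public:
    explicit HostBarrier(int expected) : expected_(expected) {}

    // Called once per finished workload. In a CUDA program this would be
    // reached from the stream callback (or from a thread the callback wakes).
    void arrive() {
        std::lock_guard<std::mutex> lock(mutex_);
        if (++arrived_ >= expected_) {
            done_.notify_all();
        }
    }

    // Blocks the caller until every expected workload has arrived.
    void wait() {
        std::unique_lock<std::mutex> lock(mutex_);
        done_.wait(lock, [this] { return arrived_ >= expected_; });
    }

    int arrived() {
        std::lock_guard<std::mutex> lock(mutex_);
        return arrived_;
    }

private:
    std::mutex mutex_;
    std::condition_variable done_;
    int expected_;
    int arrived_ = 0;
};

// Hypothetical driver: each thread plays the role of one workload's callback.
int runWorkloads(int count) {
    HostBarrier barrier(count);
    std::vector<std::thread> workers;
    for (int i = 0; i < count; ++i) {
        workers.emplace_back([&barrier] { barrier.arrive(); });
    }
    barrier.wait();  // the main thread blocks here, not in cudaDeviceSynchronize
    for (auto& w : workers) {
        w.join();
    }
    return barrier.arrived();
}
```

Calling runWorkloads(4) starts four stand-in workloads and returns only after all of them have signalled the barrier. Compared with a device-wide synchronize, the main thread waits only for the specific workloads it is tracking.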

A Stack Overflow answer from user jet47 concisely shows how to use callbacks with classes by going through a static member function:

    class MyClass
    {
    public:
        static void CUDART_CB Callback(cudaStream_t stream, cudaError_t status, void *userData);

    private:
        void callbackFunc();
    };

    void CUDART_CB MyClass::Callback(cudaStream_t stream, cudaError_t status, void *userData)
    {
        MyClass* thiz = (MyClass*) userData;
        thiz->callbackFunc();
    }

    void MyClass::callbackFunc()
    {
        // implementation here
    }

    MyClass* obj = new MyClass;
    cudaStreamAddCallback(GpuStream, MyClass::Callback, obj, 0);
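The static-member pattern can also be tried out without a GPU. The sketch below is an illustration under stated assumptions rather than CUDA code: FakeStream, FakeStatus, FakeCallback and Counter are hypothetical stand-ins for the stream, cudaError_t, the callback type and the user class. What it shows is the mechanism itself: a static function with a plain function-pointer signature receives the object through void *userData and forwards to a private member.

```cpp
#include <vector>

// Hypothetical stand-ins so the sketch runs without the CUDA runtime.
using FakeStatus = int;  // plays the role of cudaError_t; 0 means success
using FakeCallback = void (*)(FakeStatus status, void* userData);

struct FakeStream {
    struct Entry {
        FakeCallback fn;
        void* userData;
    };
    std::vector<Entry> pending;

    // Mimics registering a C-style callback together with an opaque pointer.
    void addCallback(FakeCallback fn, void* userData) {
        pending.push_back({fn, userData});
    }

    // Mimics the runtime reaching the queued callbacks in submission order.
    void drain(FakeStatus status) {
        for (const Entry& e : pending) {
            e.fn(status, e.userData);
        }
        pending.clear();
    }
};

class Counter {
public:
    // Static trampoline: it has no hidden `this` parameter, so its address
    // fits a plain function pointer. The object arrives via userData.
    static void Callback(FakeStatus status, void* userData) {
        static_cast<Counter*>(userData)->onDone(status);
    }

    int completed() const { return completed_; }
    int failed() const { return failed_; }

private:
    // The real per-object handler, reachable only through the trampoline.
    void onDone(FakeStatus status) {
        if (status == 0) {
            ++completed_;
        } else {
            ++failed_;
        }
    }

    int completed_ = 0;
    int failed_ = 0;
};
```

Registering Counter::Callback twice with one object and once with another, then draining the fake stream, updates each object separately. One practical consequence of passing a raw pointer as userData is that the object has to stay alive until the callback has run.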